18.2 Least-squares regression analysis

When we are only interested in the strength of a linear association between two numerical variables, we use a correlation analysis.

When we are interest in making predictions, we use regression analysis.

Often in the literature you see authors reporting regression results when in fact there was no rationale for using regression; a correlation analysis would have been more appropriate.

The two analyses are mathematically related.

Regression analysis is extremely common in biology, and unfortunately it is also common to see incorrect implementation of regression analysis. In particular, one often sees scatterplots that clearly reveal violations of the assumptions of regression analysis (see below).

Failure to appropriately check assumptions can lead to misleading and incorrect conclusions

18.2.1 Equation of a line and “least-squares line”

Recall from your high-school training that the equation for a straight line is:

\(Y\) = a + bX

In regression analysis, the “least-squares line” is the line for which the sum of all the squared deviations in Y is smallest.

In a regression context, a is the Y-intercept and b is the slope of the regression line.

Regression analysis falls under the heading of inferential statistics, which means we use it to draw inferences about a linear relationship between \(Y\) and \(X\) within a population of interest. So in the actual population, the true least-squares line is represented as follows:

\(Y\) = \(\alpha\) + \(\beta\)\(X\)

In practice, we estimate the “parameters” \(\alpha\) and \(\beta\) using a random sample from the population, and by calculating a and b using regression analysis.

The \(\alpha\) in the regression equation has no relation to the \(\alpha\) that sets the significance level for a test!

The equation for the true least-squares line shown above uses the equation shown in the course text book. However, an alternative way to write the equation is as follows, and includes an error term “\(\epsilon\)”:

\(Y\) = \(\beta_0\) + \(\beta_1\)\(x\) + \(\epsilon\)

18.2.2 Hypothesis testing or prediction?

In general, there are two common scenarios in which least-squares regression analysis is appropriate.

We wish to know whether some numeric variable \(Y\) can be reliably predicted using a regression model of \(Y\) on some predictor \(X\). For example, “Can vehicle mileage be reliably predicted by the weight of the vehicle?”

We wish to test a specific hypothesis about the slope of the regression model of \(Y\) on \(X\). For example, across species, basal metabolic rate (BMR) relates to body mass (M) according to \(BMR = \alpha M^\beta\). Here, \(\alpha\) is a constant. If we log-transform both sides of the equation, we get the following relationship, for which the parameters can be estimated via linear regression analysis:

\(log(BMR) = log(\alpha) + \beta\cdot log(M)\)

Some biological hypotheses propose that the slope \(\beta\) should equal 2/3, whereas others propose 3/4. This represents an example of when one would propose a specific, hypothesized value for \(\beta\).

In the most common application of regression analysis we don’t propose a specific value for the slope \(\beta\). Instead, we proceed with the “default” and unstated null and alternative hypotheses as follows:

H0: \(\beta = 0\)

HA: \(\beta \neq 0\)

In this case, there is no need to explicitly state these hypotheses for regression analysis.

You must, however, know what is being tested: the null hypothesis that the slope is equal to zero.

18.2.3 Steps to conducting regression analysis

In regression analysis one must implement the analysis before one can check the assumptions. Thus, the order of operations is a bit different from that of other tests.

Follow these steps when conducting regression analysis:

- Set an \(\alpha\) level (default is 0.05)

- Provide an appropriate figure, including figure caption, to visualize the association between the two numeric variables

- Provide a sentence or two interpreting your figure, and this may inform your concluding statement

- Conduct the regression analysis, save the output, and use the residuals from the analysis to check assumptions

- Assumptions

- state the assumptions of the test

- use appropriate figures and / or tests to check whether the assumptions of the statistical test are met

- transform data to meet assumptions if required

- if assumptions can’t be met (e.g. after transformation), use alternative methods (these alternative methods are not covered in this course)

- if data transformation is required, then do the transformation and provide another appropriate figure, including figure caption, to visualize the transformed data, and a sentence or two interpreting this new figure

- state the assumptions of the test

- Calculate the confidence interval for the slope and the \(R^2\) value, report these in concluding statement

- If the slope of the least-squares regression line differs significantly from zero (i.e. the regression is significant), provide a scatterplot that includes the regression line and confidence bands, with an appropriate figure caption

- Use the output from the regression analysis (above) draw an appropriate conclusion and communicate it clearly

18.2.4 State question and set the \(\alpha\) level

The plantbiomass dataset (Example 17.3 in the text book) includes data describing plant biomass measured after 10 years within each of 5 experimental “species richness” treatments, wherein plants were grown in groups of 1, 2, 4, 8, or 16 species. The treatment variable is called nSpecies, and the response variable biomassStability is a measure of ecosystem stability. The research hypothesis was that increasing diversity (species richness) would lead to increased ecosystem stability. If the data support this hypothesis, it would be valuable to be able to predict ecosystem stability based on species richness.

We will use the conventional \(\alpha\) level of 0.05.

18.2.5 Visualize the data

Using the plant biomass data, let’s evaluate whether ecosystem stability can be reliably predicted from plant species richness.

We learned in an earlier tutorial that the best way to visualize an association between two numeric variables is with a scatterplot, and that we can create a scatterplot using the ggplot and geom_point functions:

plantbiomass %>%

ggplot(aes(x = nSpecies, y = biomassStability)) +

geom_point(shape = 1) +

xlab("Species richness") +

ylab("Biomass stability") +

theme_bw()

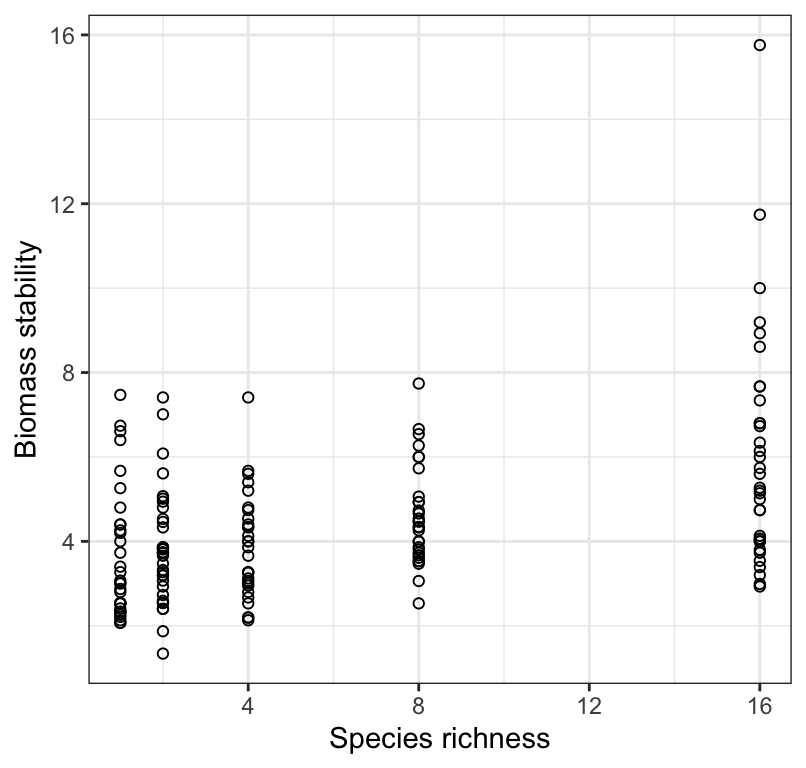

Figure 18.2: Stability of biomass production over 10 years in 161 plots and the initial number of plant species assigned to plots.

Note that in the above figure we do not report units for the response variable, because in this example the variable does not have units. But often you do need to provide units!

This figure that you first produce for visualizing the data may or may not be necessary to present with your results. If your least-squares regression does end up being statistically significant, i.e. having a slope that differs significantly from zero, then you’ll create a new figure to reference in your concluding statement (see below). If your regression analysis is non-significant, then you should present and refer to this simple scatterplot (which does not include a best-fit regression line).

18.2.6 Interpreting a scatterplot

In an earlier tutorial, we learned how to properly interpret a scatterplot, and what information should to include in your interpretation. Be sure to consult that tutorial.

We see in Figure 18.2 that there is a weak, positive, and somewhat linear association between biomass stability and Species richness. There appears to be increasing spread of the response variable with increasing values of the predictor (x) variable, and perhaps outliers in the treatment group with greatest species richness. We should keep this in mind.

18.2.7 Checking assumptions of regression analysis

Regression analysis assumes that:

- the true relationship between \(X\) and \(Y\) is linear

- for every value of \(X\) the corresponding values of \(Y\) are normally distributed

- the variance of \(Y\)-values is the same at all values of \(X\)

- at each value of \(X\), the \(Y\) measurements represent a random sample from the population of possible \(Y\) values

Strangely, we must implement the regression analysis before checking the assumptions. This is because we check the assumptions using the residuals from the regression analysis.

Let’s implement the regression analysis and be sure to store the output for future use.

The function to use is the lm function, which we saw in a previous tutorial, but here our predictor (independent) variable is a numeric variable rather than a categorical variable.

Let’s run the regression analysis and assign the output to an object as follows:

Let’s have a look at the untidy output:

##

## Call:

## lm(formula = biomassStability ~ nSpecies, data = plantbiomass)

##

## Coefficients:

## (Intercept) nSpecies

## 3.4081 0.1605We’ll learn a bit later how to tidy up this output…

Before we do anything else (e.g. explore the results of the regression), we need to first check the assumptions!

To get the residuals, which are required to check the assumptions, you first need to run the augment function from the broom package on the untidy regression object, as this will allow us to easily access the residuals.

This produces a tibble, in which one of the variables, called “.resid”, houses the residuals that we’re after.

Let’s have a look:

## # A tibble: 161 × 8

## biomassStability nSpecies .fitted .resid .hat .sigma .cooksd .std.resid

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 7.47 1 3.569 3.901 0.01177 1.707 0.03063 2.268

## 2 6.74 1 3.569 3.171 0.01177 1.717 0.02024 1.844

## 3 6.61 1 3.569 3.041 0.01177 1.718 0.01862 1.768

## 4 6.4 1 3.569 2.831 0.01177 1.721 0.01613 1.646

## 5 5.67 1 3.569 2.101 0.01177 1.727 0.008887 1.222

## 6 5.26 1 3.569 1.691 0.01177 1.730 0.005758 0.9835

## 7 4.8 1 3.569 1.231 0.01177 1.733 0.003052 0.7160

## 8 4.4 1 3.569 0.8314 0.01177 1.734 0.001391 0.4834

## 9 4.4 1 3.569 0.8314 0.01177 1.734 0.001391 0.4834

## 10 4.26 1 3.569 0.6914 0.01177 1.735 0.0009622 0.4020

## # ℹ 151 more rows“Residuals” represent the vertical difference between each observed value of \(Y\) (here, biomass stability) the least-squares line (the predicted value of \(Y\), “.fitted” in the tibble above) at each value of \(X\).

Don’t worry about the other variables in the tibble for now.

Let’s revisit the assumptions:

- the true relationship between \(X\) and \(Y\) is linear

- for every value of \(X\) the corresponding values of \(Y\) are normally distributed

- the variance of \(Y\)-values is the same at all values of \(X\)

- at each value of \(X\), the \(Y\) measurements represent a random sample from the population of possible \(Y\) values

With respect to the last assumption regarding random sampling, we can assume that it is met for the plant experiment example. In experiments, the key experimental design feature is that individuals (here, plant seedlings) are randomly assigned to treatments, and that the individuals themselves were randomly drawn from the population prior to randomizing to experimental treatments. If your study is observational, then it is key that the individuals upon which measurements were made were randomly drawn from the population.

For the first three assumptions, we start by looking at the original scatterplot 18.2, but this time we add what’s called a “locally weighted smoother” line, using the ggplot2 function geom_smooth:

plantbiomass %>%

ggplot(aes(x = nSpecies, y = biomassStability)) +

geom_point(shape = 1) +

geom_smooth(method = "loess", se = FALSE, formula = y ~ x) +

xlab("Species richness") +

ylab("Biomass stability") +

theme_bw()

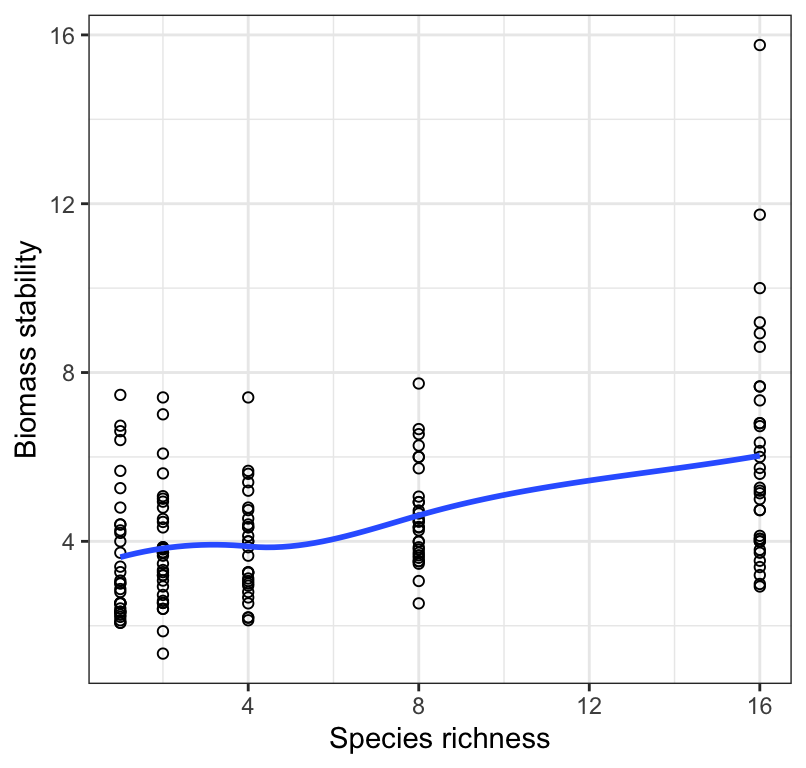

Figure 18.3: Stability of biomass production over 10 years in 161 plots and the initial number of plant species assigned to plots. Also shown is a locally weighted smoothing line.

Note the arguments provided for the geom_smooth function.

The smoothing line will make it easier to assess whether the relationship is linear or not. Sometimes it is obvious from the scatterplot that the association is non-linear (curved) (violating the first assumption), and sometimes it is obvious that the spread of the \(Y\) values appears to change systematically (e.g. get bigger) with increasing values of \(X\) (violating the third assumption).

The second assumption is better assessed using the residuals (see below).

In either case, one can try transforming the response variable, re-running the regression analysis using this transformed variable as the new “Y’” variable, then checking the assumptions again.

18.2.7.1 Outliers

Outliers will cause the first three regression assumptions to be violated.

Viewing the scatterplot, keep an eye out for outliers, i.e. observations that look very unusual relative to the other observations. In regression, observations that are far away from the general linear association can have undue influence over the best-fit line. This sounds a bit fuzzy to judge, and it is. There are more formal approaches to evaluating “outliers”, but for now use this approach: If your eye is drawn to an observation because it seems unusual compared to the rest, then you’re probably on to something. In this case, the most transparent and thorough approach is to report your regression results first using the full dataset, and then second, excluding the outlier observations from the analysis.

Two other types of graphs are used to help evaluate assumptions.

We can check the normality of residuals assumption with a normal quantile plot, which you’ve seen previously.

For this, we use the “augmented” output we created above.

biomass.lm.augment %>%

ggplot(aes(sample = .resid)) +

stat_qq(shape = 1, size = 2) +

stat_qq_line() +

theme_bw()

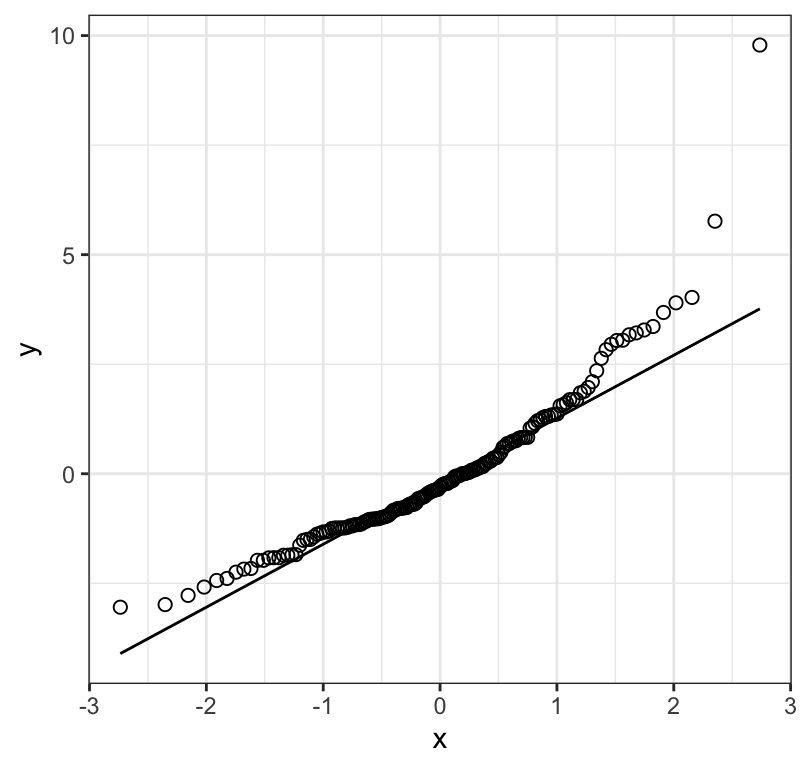

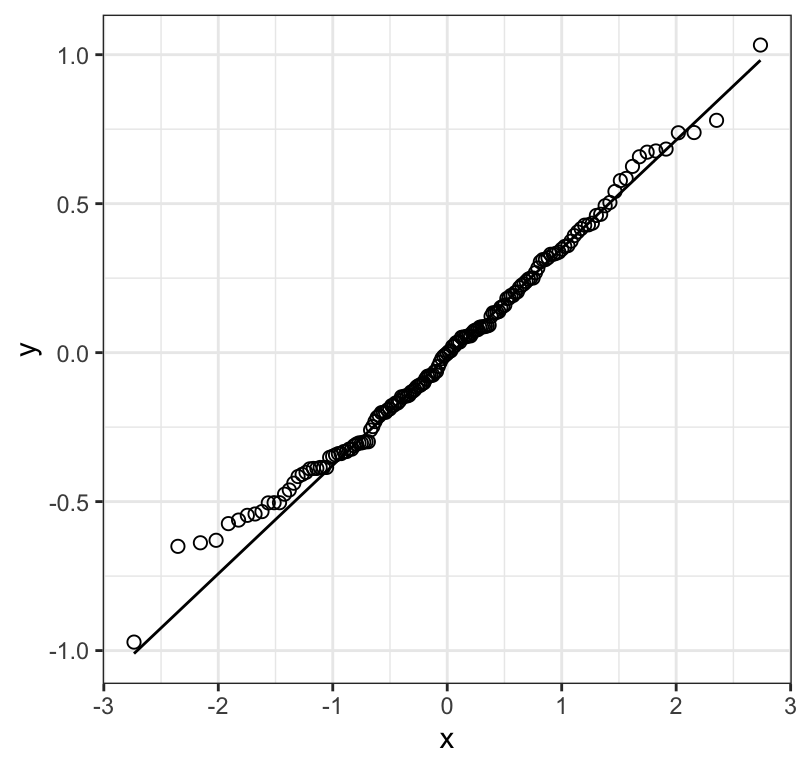

Figure 18.4: Normal quantile plot of the residuals from a regression of biomass stability on Species richness for 161 plots

Figure 18.4 shows that the residuals don’t really fall consistently near the line in the normal quantile plot; there is curviture, and increasing values tend to fall further from the line. This suggests the need to log-transform the data.

Before trying a transformation, let’s also check the assumptions that (i) the variance of \(Y\) is the same at all values of \(X\), and (ii) the assumption that the association is linear. To do this, we plot the residuals against the original “\(X\)” variable, which here is the number of species initially used in the treatment. Note that we’ll add a horizontal line (“segement”) at zero for reference:

biomass.lm.augment %>%

ggplot(aes(x = nSpecies, y = .resid)) +

geom_point(shape = 1) +

geom_abline(slope = 0, linetype = "dashed") +

xlab("Species richness") +

ylab("Residual") +

theme_bw()

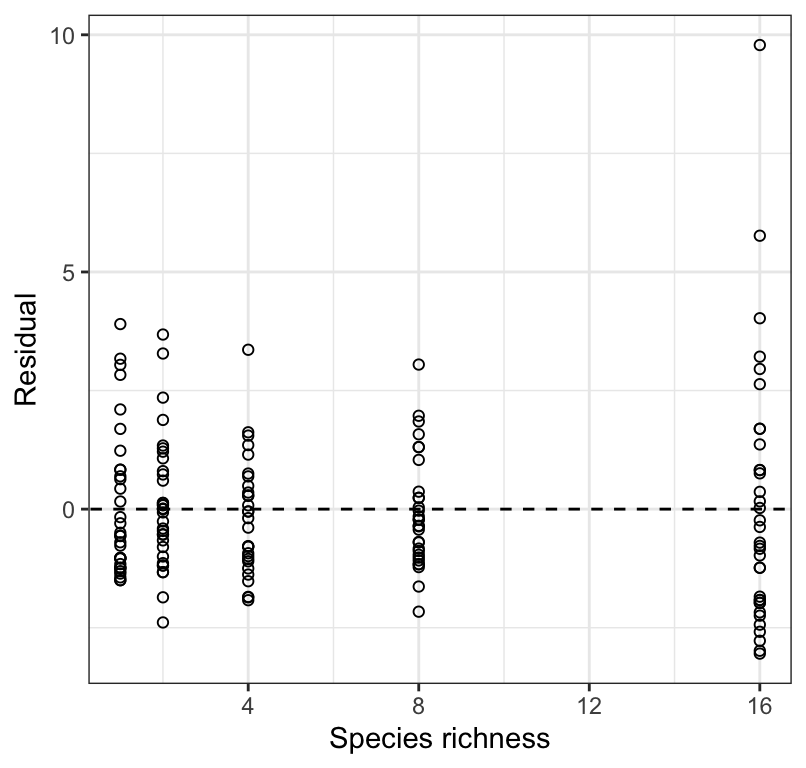

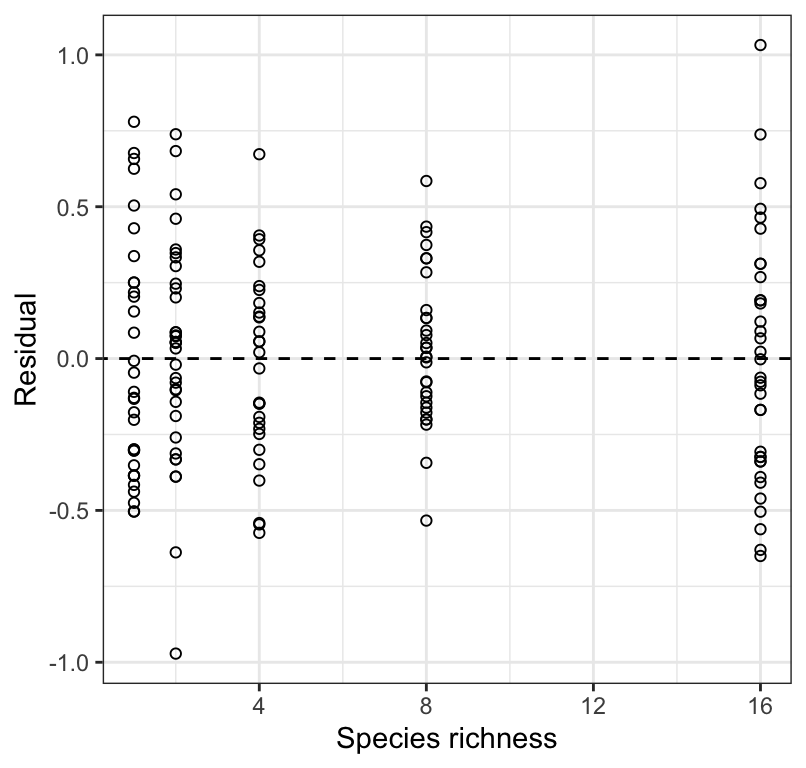

Figure 18.5: Residual plot from a regression of biomass stability on Species richness for 161 plots.

Here we are watching out for:

- a “funnel” shape, or substantial differences in the spread of the residuals at each value of \(X\)

- outliers to the general trend

- a curved pattern

Figure 18.5 shows that the variance in biomass stability is greatest within the largest species richness treatment. This again suggests the need for a log transformation.

REMINDER: If ever you see a clear outlier when checking assumptions, then a safe approach is to report the regression with and without the outlier point(s) included. If the exclusion of the outlier changes your conclusions, then you should make this clear.

18.2.8 Residual plots when you have missing values

IF there are missing values in either the \(X\) or \(Y\) variables used in the regression, then simply filter those NA rows out before plotting the residuals.

For example, imagine one of the rows in our biomass dataset included a missing value (“NA”) in the “nSpecies” variable, then we could use this code for producing the residual plot:

biomass.lm.augment %>%

filter(!is.na(nSpecies)) %>%

ggplot(aes(x = nSpecies, y = .resid)) +

geom_point(shape = 1) +

geom_abline(slope = 0, linetype = "dashed") +

xlab("Species richness") +

ylab("Residual") +

theme_bw()18.2.9 Transform the data

Let’s log-transform the response variable, creating a new variable log.biomass using the mutate function from the dplyr package:

Now let’s re-run the regression analysis:

And augment the output:

And re-plot the residual diagnostic plots:

log.biomass.lm.augment %>%

ggplot(aes(sample = .resid)) +

stat_qq(shape = 1, size = 2) +

stat_qq_line() +

theme_bw()

Figure 18.6: Normal quantile plot of the residuals from a regression of biomass stability (log transformed) on Species richness for 161 plots

log.biomass.lm.augment %>%

ggplot(aes(x = nSpecies, y = .resid)) +

geom_point(shape = 1) +

geom_abline(slope = 0, linetype = "dashed") +

xlab("Species richness") +

ylab("Residual") +

theme_bw()

Figure 18.7: Residual plot from a regression of biomass stability (log transformed) on Species richness for 161 plots.

Figure 18.6 shows that the residuals are reasonably normally distributed, and 18.7 shows no strong pattern of changing variance in the residuals along \(X\), and nor is there an obvious curved pattern to the residuals (which would indicate a non-linear association). We therefore proceed with the analyses using the log-transformed response variable.

18.2.10 Conduct the regression analysis

We have already conducted the main regression analysis above, using the lm function. We stored the output in the log.biomass.lm object. Now, we use the summary function to view the entire output from the regression analysis:

##

## Call:

## lm(formula = log.biomass ~ nSpecies, data = plantbiomass)

##

## Residuals:

## Min 1Q Median 3Q Max

## -0.97148 -0.25984 -0.00234 0.23100 1.03237

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 1.198294 0.041298 29.016 < 2e-16 ***

## nSpecies 0.032926 0.004884 6.742 2.73e-10 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.3484 on 159 degrees of freedom

## Multiple R-squared: 0.2223, Adjusted R-squared: 0.2174

## F-statistic: 45.45 on 1 and 159 DF, p-value: 2.733e-10What do we need to focus on in this output?

Under the “Estimate” heading, you see the estimated value of the Intercept (a) and of the slope (b), which is reported to the right of the predictor variable name (“nSpecies”).

There are two values of t reported in the table; one associated with the intercept, and one with the slope. These are testing the implied hypotheses that (i) the intercept equals zero and (ii) the slope equals zero. We are typically only interested in the slope.

At the bottom of the output you’ll see a value for the F statistic, just like you saw in the ANOVA tutorial. It is this value of F that you will report in statements about regression (see below).

We also see the P-value associated with the test of the zero slope null hypothesis, which is identical to the P-value reported for the overall regression (at the bottom of the output).

It is important to recognize that an overall significant regression (i.e. slope significantly different from zero) does not necessarily mean that the regression will yield accurate predictions. For this we need to assess the “coefficient of determination”, or \(R^2\) value (called “multiple R-squared” in the output), which here is 0.22, and represents the fraction of the variation in \(Y\) that is accounted for by the linear regression model. A value of this magnitude is indicative of a comparatively weak regression, i.e. one that does has predictive power, but not particularly good predictive power (provided assumptions are met, and we’re not extrapolating beyond the range of our \(X\) values).

Before getting to our concluding statement, we need to additionally calculate the 95% confidence interval for the true slope, \(\beta\).

18.2.11 Confidence interval for the slope

To calculate the confidence interval for the true slope, \(\beta\), we use the confint function from the base stats package:

?confintWe run this function on the lm model output:

## 2.5 % 97.5 %

## (Intercept) 1.11673087 1.27985782

## nSpecies 0.02328063 0.04257117This provides the lower and upper limits of the 95% confidence interval for the intercept (top row) and slope (bottom row).

18.2.12 Scatterplot with regression confidence bands

If your regression is significant, then it is recommended to accompany your concluding statement with a scatterplot that includes so-called confidence bands around the regression line.

If your regression is not significant, do not include a regression line in your scatterplot!

We can calculate two types of confidence intervals to show on the scatterplot:

- the 95% “confidence bands”

- the 95% “prediction intervals”

Most of the time we’re interested in showing confidence bands, which show the 95% confidence limits for the mean value of \(Y\) in the population at each value of \(X\). In other words, we’re more interested in predicting an average value in the population (easier), rather than the value of an individual in the population (much more difficult).

To display the confidence bands, we use the geom_smooth function from the ggplot2 package:

?geom_smoothLet’s give it a try, making sure to spruce it up a bit with some additional options, and including a good figure caption, shown here for your reference (this is the code you’d put after the “r” in the header of the R chunk:

fig.cap = "Stability of biomass production (log transformed) over 10 years in 161 plots and the initial number of plant species assigned to plots. Also shown is the significant least-square regression line (black solid line; see text for details) and the 95% confidence bands (grey shading)."plantbiomass %>%

ggplot(aes(x = nSpecies, y = log.biomass)) +

geom_point(shape = 1) +

geom_smooth(method = "lm", colour = "black",

se = TRUE, level = 0.95) +

xlab("Species richness") +

ylab("Biomass stability (log-transformed)") +

theme_bw()## `geom_smooth()` using formula = 'y ~ x'

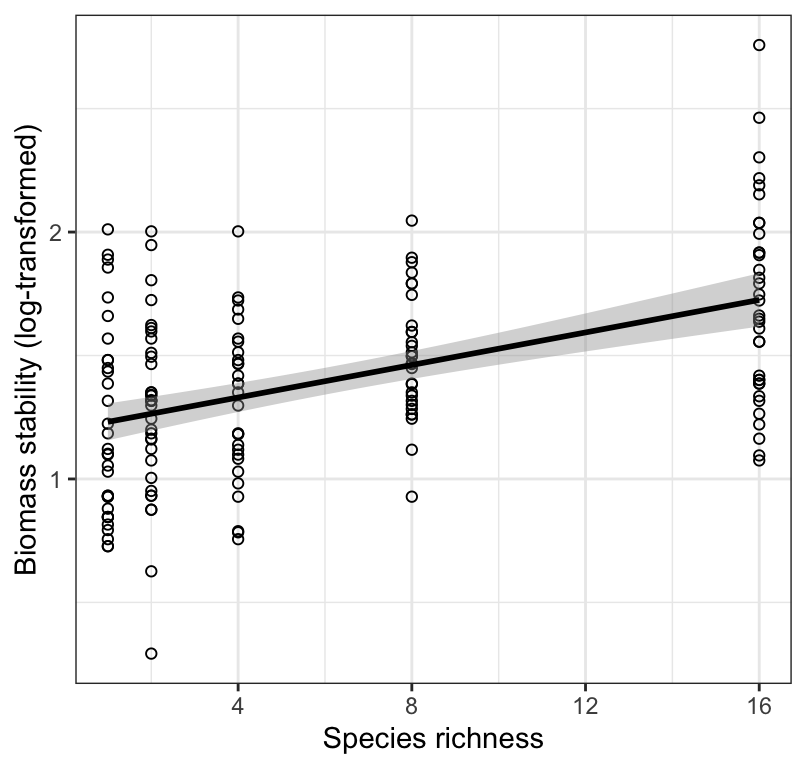

Figure 18.8: Stability of biomass production (log transformed) over 10 years in 161 plots and the initial number of plant species assigned to plots. Also shown is the significant least-square regression line (black solid line; see text for details) and the 95% confidence bands (grey shading).

There are a few key arguments for the geom_smooth function:

- the “method” argument tells ggplot to use a least-squares linear model

- the “se = TRUE” argument tells ggplot to add confidence bands

- the “level = 0.95” tells it to use 95% confidence level

- the “colour” argument tells it what colour to make the best-fit regression line

Here we can interpret the figure as follows:

Figure 18.8 shows that biomass stability (log-transformed) is positively and linearly related to the initial number of plant species assigned to experimental plots, but there is considerable variation that remains unexplained by the least-square regression model.

18.2.13 Concluding statement

Now that we have our new figure including confidence bands (because our regression was significant), and all our regression output (including confidence intervals for the slope), we’re ready to provide a concluding statement.

We simply report the sample size rather than the degrees of freedom in the parentheses.

As seen in Figure 18.8, Species richness is a significant predictor of biomass stability: Biomass stability (log) = 1.2 + 0.03(Species richness); F = 45.45; n = 161; 95% confidence limits for the slope: 0.023, 0.043; \(R^2\) = 0.22; P < 0.001). Given the relatively low \(R^2\) value, predictions from our regression model will not be particularly accurate.

It is OK to report the concluding statement and associated regression output using the log-transformed data. However, if you wanted to make predictions using the regression model, it is often desirable to report the back-transformed predicted values (see below).